You can prevent offensive chatbot responses in support calls by implementing real-time content moderation, toxicity filtering, reinforcement learning from human feedback (RLHF), and fine-tuning on safe, customer-friendly datasets.

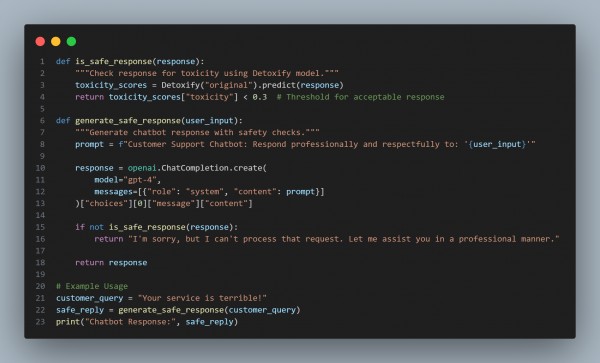

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Real-Time Toxicity Detection: Uses Detoxify to filter offensive content.

- Threshold-Based Moderation: Blocks responses exceeding a toxicity score of 0.3.

- Context-Aware Prompting: Ensures polite and professional chatbot responses.

- Failsafe for High-Risk Responses: Provides a neutral fallback if a response is unsafe.

- Integration with GPT-4 for Intelligent Replies: Enhances conversational quality while ensuring safety.
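
The key points above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not necessarily the exact code from the answer: the names `safe_reply`, `detoxify_scorer`, `gpt4_generator`, and the wording of `SYSTEM_PROMPT` and `FALLBACK_REPLY` are hypothetical. It assumes the `detoxify` and `openai` packages are installed and that an `OPENAI_API_KEY` is set in the environment. The scorer and generator are passed in as plain functions so the moderation logic can be tried with stand-ins before wiring up the real models.

```python
from typing import Callable

TOXICITY_THRESHOLD = 0.3  # threshold mentioned in the key points above

# Hypothetical wording, chosen only for illustration.
SYSTEM_PROMPT = "You are a polite, professional customer support assistant."
FALLBACK_REPLY = (
    "I'm sorry, I can't provide a response to that right now. "
    "Could you please rephrase your question?"
)


def detoxify_scorer() -> Callable[[str], float]:
    """Build a toxicity scorer backed by Detoxify (pip install detoxify)."""
    from detoxify import Detoxify  # lazy import: only needed for the real model

    model = Detoxify("original")
    # predict() returns a dict of scores; "toxicity" is the overall score.
    return lambda text: float(model.predict(text)["toxicity"])


def gpt4_generator() -> Callable[[str], str]:
    """Build a reply generator backed by the OpenAI chat completions API."""
    from openai import OpenAI  # lazy import: only needed for the real model

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def generate(user_message: str) -> str:
        response = client.chat.completions.create(
            model="gpt-4",
            messages=[
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": user_message},
            ],
        )
        return response.choices[0].message.content or ""

    return generate


def safe_reply(
    user_message: str,
    generate: Callable[[str], str],
    score: Callable[[str], float],
    threshold: float = TOXICITY_THRESHOLD,
    fallback: str = FALLBACK_REPLY,
) -> str:
    """Generate a reply and replace it with a neutral fallback if it scores as toxic."""
    draft = generate(user_message)
    if score(draft) > threshold:
        return fallback  # failsafe for high-risk responses
    return draft
```

With the real models, a call would look like `safe_reply("Where is my order?", gpt4_generator(), detoxify_scorer())`. The 0.3 threshold is fairly strict; it is worth tuning against a sample of real support transcripts so that harmless replies are not blocked too often.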

Hence, preventing offensive chatbot responses in customer support requires robust content moderation, toxicity filtering, and reinforcement learning from human feedback to maintain professionalism and ensure a positive user experience.
