You can build a PyTorch training loop for a Transformer-based encoder-decoder model by running a forward pass, computing the loss, and updating the parameters on each iteration.

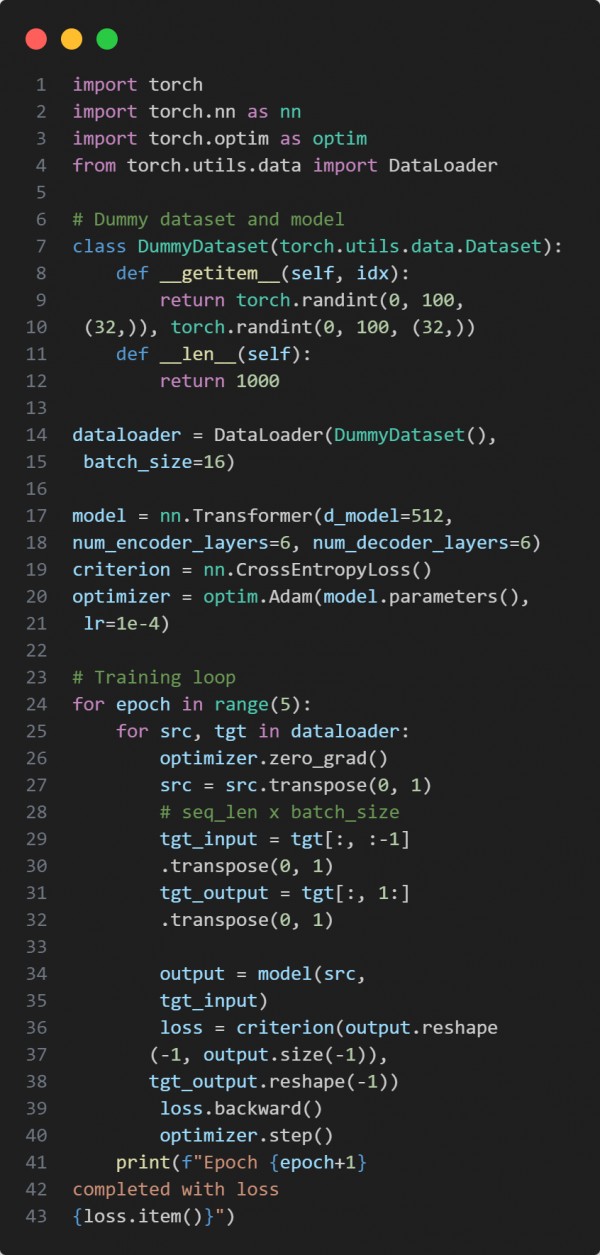

Here is the code snippet below:

The code above uses the following key components:

- A PyTorch DataLoader to iterate over batches.
- A Transformer model with encoder and decoder layers.
- CrossEntropyLoss and the Adam optimizer for training.
- Teacher forcing: the decoder receives the target sequence, shifted right by one position, as its input.
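The components listed above can be combined into a minimal, self-contained sketch. It is not a transcription of the original snippet; it is an illustration under stated assumptions: toy data where the target is the reversed source, hypothetical vocabulary sizes and special-token ids (`PAD_IDX`, `BOS_IDX`, `EOS_IDX`), a hypothetical `Seq2SeqTransformer` wrapper around `nn.Transformer`, and learned positional embeddings (`nn.Transformer` adds no positional information on its own, so some positional scheme has to be supplied; sinusoidal encodings are an equally valid choice).

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy settings (assumptions, not from the original snippet)
SRC_VOCAB = TGT_VOCAB = 100
PAD_IDX, BOS_IDX, EOS_IDX = 0, 1, 2
D_MODEL, MAX_LEN = 32, 64


class Seq2SeqTransformer(nn.Module):
    """Hypothetical wrapper: embeddings -> nn.Transformer -> vocabulary logits."""

    def __init__(self):
        super().__init__()
        self.src_emb = nn.Embedding(SRC_VOCAB, D_MODEL, padding_idx=PAD_IDX)
        self.tgt_emb = nn.Embedding(TGT_VOCAB, D_MODEL, padding_idx=PAD_IDX)
        # nn.Transformer adds no positional information, so learned positions are used here
        self.pos_emb = nn.Embedding(MAX_LEN, D_MODEL)
        self.transformer = nn.Transformer(
            d_model=D_MODEL, nhead=4, num_encoder_layers=2, num_decoder_layers=2,
            dim_feedforward=64, dropout=0.1, batch_first=True,
        )
        self.generator = nn.Linear(D_MODEL, TGT_VOCAB)

    def embed(self, emb, tokens):
        positions = torch.arange(tokens.size(1), device=tokens.device)
        return emb(tokens) + self.pos_emb(positions)  # broadcast over the batch

    def forward(self, src, tgt_in):
        tgt_len = tgt_in.size(1)
        # Boolean causal mask: True marks future positions the decoder may not attend to
        causal = torch.triu(
            torch.ones(tgt_len, tgt_len, dtype=torch.bool, device=src.device), diagonal=1
        )
        hidden = self.transformer(
            self.embed(self.src_emb, src),
            self.embed(self.tgt_emb, tgt_in),
            tgt_mask=causal,
            src_key_padding_mask=src.eq(PAD_IDX),
            tgt_key_padding_mask=tgt_in.eq(PAD_IDX),
            memory_key_padding_mask=src.eq(PAD_IDX),
        )
        return self.generator(hidden)  # (batch, tgt_len, TGT_VOCAB)


# Toy data: the target is the reversed source, wrapped in BOS ... EOS
N, SEQ_LEN = 64, 10
src_data = torch.randint(3, SRC_VOCAB, (N, SEQ_LEN))
bos = torch.full((N, 1), BOS_IDX)
eos = torch.full((N, 1), EOS_IDX)
tgt_data = torch.cat([bos, src_data.flip(1), eos], dim=1)
loader = DataLoader(TensorDataset(src_data, tgt_data), batch_size=16, shuffle=True)

model = Seq2SeqTransformer()
criterion = nn.CrossEntropyLoss(ignore_index=PAD_IDX)  # padding does not count toward the loss
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)


def train_epoch(model, loader):
    model.train()
    total = 0.0
    for src, tgt in loader:
        # Teacher forcing: the decoder input is the target shifted right by one,
        # and the label at each position is the next target token.
        tgt_in, tgt_out = tgt[:, :-1], tgt[:, 1:]
        logits = model(src, tgt_in)  # forward pass
        loss = criterion(logits.reshape(-1, TGT_VOCAB), tgt_out.reshape(-1))

        optimizer.zero_grad()  # clear gradients from the previous step
        loss.backward()        # backpropagate
        optimizer.step()       # update parameters
        total += loss.item()
    return total / len(loader)  # mean loss over the epoch's batches


losses = [train_epoch(model, loader) for _ in range(20)]
print([round(l, 3) for l in losses])
```

The teacher-forcing step is the slice `tgt[:, :-1]` / `tgt[:, 1:]`: the decoder reads the sequence starting with BOS and is trained to predict the same sequence ending with EOS, while the causal mask keeps each position from seeing later tokens. For real data, the toy tensors would be replaced by a tokenized `Dataset` and, typically, a `collate_fn` that pads each batch with `PAD_IDX`.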

Together, these pieces cover the essentials of training a Transformer-based encoder-decoder model in PyTorch.
