You can write a script to preprocess human feedback datasets for LLM reinforcement learning by cleaning, tokenizing, and formatting prompt-response-reward pairs into a structured format ready for training.

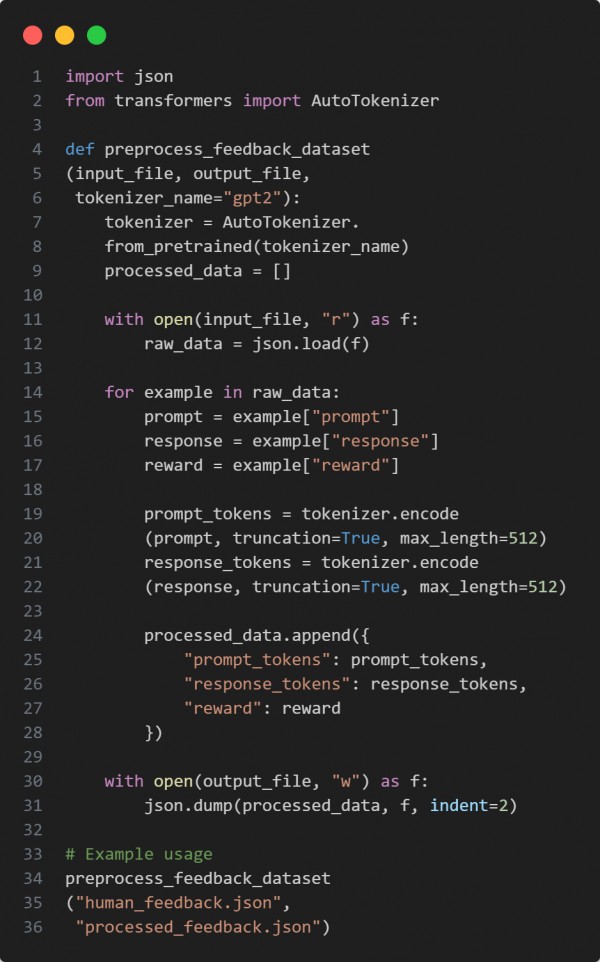

The code snippet is shown in the image above.

The code above relies on the following key points:

- JSON parsing to load the raw human feedback data.
- Tokenization of prompts and responses using Hugging Face tokenizers.
- Truncation and formatting to prepare the data for LLM consumption.
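
Because the original code is available only as an image, here is a minimal, self-contained Python sketch of the same steps: parse the JSON, clean the text, tokenize, truncate, and emit structured records. It is an illustration under stated assumptions, not the author's exact code. The input keys `prompt`, `response`, and `reward`, the helper names `clean_text`, `preprocess_feedback`, and `make_toy_tokenizer`, and the token limits are all hypothetical. To keep the example runnable offline, `preprocess_feedback` accepts any tokenizer callable, and a toy whitespace tokenizer stands in for a Hugging Face one. With the `transformers` library you could instead pass something like `lambda t: tokenizer.encode(t, add_special_tokens=False)` after loading `tokenizer = AutoTokenizer.from_pretrained(...)`.

```python
import json
import re
from typing import Callable, Dict, List


def clean_text(text: str) -> str:
    """Collapse runs of whitespace into single spaces and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def make_toy_tokenizer() -> Callable[[str], List[int]]:
    """Hypothetical stand-in for a Hugging Face tokenizer.

    Splits on whitespace and assigns each new word the next integer id.
    """
    vocab: Dict[str, int] = {}

    def tokenize(text: str) -> List[int]:
        return [vocab.setdefault(word, len(vocab)) for word in text.split()]

    return tokenize


def preprocess_feedback(
    raw_json: str,
    tokenize: Callable[[str], List[int]],
    max_prompt_tokens: int = 128,
    max_response_tokens: int = 256,
) -> List[Dict]:
    """Turn raw feedback JSON into tokenized prompt-response-reward records.

    Assumes `raw_json` is a JSON array of objects with the (hypothetical)
    keys "prompt", "response", and "reward".
    """
    records = json.loads(raw_json)
    processed = []
    for rec in records:
        prompt = clean_text(rec.get("prompt", ""))
        response = clean_text(rec.get("response", ""))
        reward = rec.get("reward")
        # Drop incomplete records: empty text or a missing reward score.
        if not prompt or not response or reward is None:
            continue
        # Tokenize, then truncate each side to its own token budget.
        prompt_ids = tokenize(prompt)[:max_prompt_tokens]
        response_ids = tokenize(response)[:max_response_tokens]
        processed.append(
            {
                "prompt_ids": prompt_ids,
                "response_ids": response_ids,
                "reward": float(reward),
            }
        )
    return processed


if __name__ == "__main__":
    raw = json.dumps(
        [
            {"prompt": "  What is RLHF? ",
             "response": "Reinforcement learning  from human feedback.",
             "reward": 0.9},
            # Skipped: the prompt is empty after cleaning.
            {"prompt": "", "response": "orphan response", "reward": 0.1},
        ]
    )
    data = preprocess_feedback(raw, make_toy_tokenizer(), max_prompt_tokens=2)
    print(data)
    # [{'prompt_ids': [0, 1], 'response_ids': [3, 4, 5, 6, 7], 'reward': 0.9}]
```

Keeping the tokenizer as a parameter separates the cleaning and truncation logic from any particular model vocabulary, so the same function works with whichever tokenizer matches the model you plan to train.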

Together, these steps keep your dataset clean, consistent, and properly formatted for efficient LLM training.