To implement dynamic sampling techniques like top-k sampling and top-p (nucleus) sampling for text generation with GPT-3, you restrict the probability distribution from which the next token is sampled. Both techniques help improve diversity while keeping randomness under control.

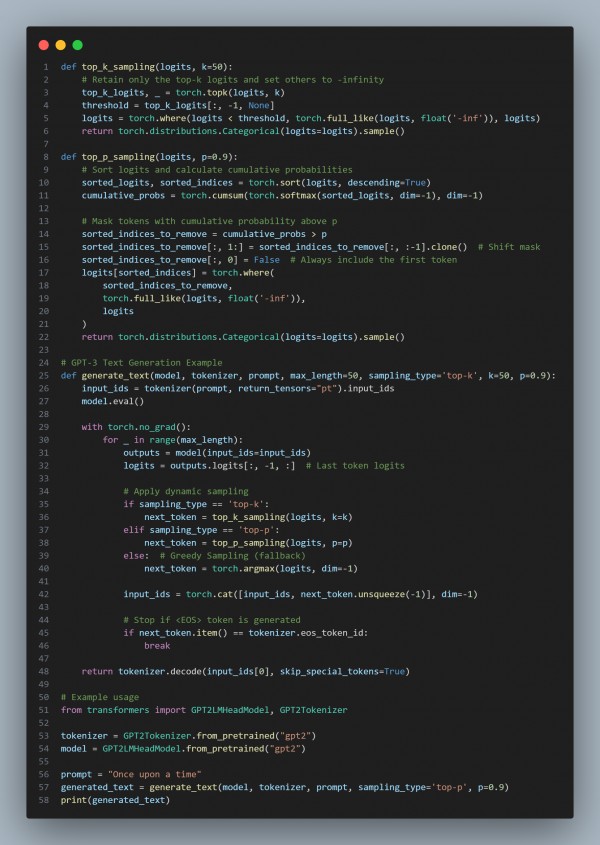

Here is the code you can refer to:

In the above code, we are using the following:

- Top-K Sampling:
  - Limits the selection to the k most probable tokens.
  - Use it when you want a fixed cap on randomness.
- Top-P Sampling:
  - Keeps the smallest set of most probable tokens whose cumulative probability reaches p.
  - Adapts the number of candidate tokens to the shape of the distribution.
- Diversity Control:
  - Both methods produce more varied and creative outputs than greedy decoding, while filtering out unlikely tokens that would hurt coherence.
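The two filters described above can be sketched in plain Python. This is a minimal illustration on a toy next-token distribution, not a GPT-3 API call: the function names (`top_k_filter`, `top_p_filter`, `sample_token`) and the example probabilities are assumptions made up for this sketch, and it presumes you already have a probability for each candidate token.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable tokens, zero out the rest, renormalize."""
    if k <= 0:
        raise ValueError("k must be positive")
    # Token indices sorted from most to least probable.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep = order[:k]
    total = sum(probs[i] for i in keep)
    filtered = [0.0] * len(probs)
    for i in keep:
        filtered[i] = probs[i] / total
    return filtered


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability
    reaches p, zero out the rest, renormalize."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    filtered = [0.0] * len(probs)
    for i in keep:
        filtered[i] = probs[i] / total
    return filtered


def sample_token(probs, rng=random):
    """Draw one token index according to the (filtered) probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Hypothetical next-token probabilities for a 4-token vocabulary.
probs = [0.5, 0.3, 0.15, 0.05]
top_k_probs = top_k_filter(probs, k=2)    # keeps tokens 0 and 1
top_p_probs = top_p_filter(probs, p=0.9)  # keeps tokens 0, 1 and 2
next_token = sample_token(top_p_probs)
```

Note that top-k always keeps exactly k candidates, while top-p keeps fewer candidates when the distribution is peaked and more when it is flat.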

Hence, by referring to this, you can implement dynamic sampling techniques like top-k sampling and top-p sampling for text generation with GPT-3.
